Table of Contents

Study Guide 4: Scheduling

Scheduling is the process of deciding which threads are given access to resources from moment to moment.

Usually, scheduling pertains to CPU access times, but it can encompass any type of resource like network bandwidth or disk access. When discussing scheduling, we typically make some assumptions for simplification. Each user is assumed to have one single-threaded program where each program is independent of each other.

Goals and Criteria

The goal of scheduling is to dole out CPU time to optimize some desired parameters of the system. Generally speaking, scheduling policies focus on the following goals and criteria.

Minimize Completion Time

Completion time is the combination of the waiting time plus the run time of a process (e.g. time to compile a program, time to echo a keystroke in an editor). Minimizing completion time is crucial for time-sensitive tasks (e.g. I/O).

Maximize Throughput

Throughput is the rate at which tasks are completed. While throughput is related to completion time, they are not the same. Maximizing throughput involves minimizing overhead (e.g. context switching) and efficient use of resources.

Maintain Fairness

Fairness refers to sharing resources in some equitable manner. Evidently, fairness is not very well defined unless specific parameters are specified. Maintaining fairness will usually contradict minimizing completion time.

Scheduling Policies

First Come First Serve (FCFS)

First come first serve (FCFS) schedules tasks in the order in which they arrive. It’s simple to implement and is good for throughput since it minimizes the overhead of context switching. However, average completion time under FCFS can very significantly according to arrival order. Additionally, FCFS suffers from the Convoy effect where short tasks get stuck behind long tasks.

Shortest Job First (SJF) / Shortest Remaining Time First (SRTF)

Shortest job first (SJF) schedules the shortest task first. Shortest remaining time first (SRTF) is a preemptive version of SJF. If a task arrives and has a shorter time to completion than the current running task, the resource will be preempted. SJF and SRTF are provably optimal for minimizing average completion time among non-preemptive and preemptive schedulers, respectively. However, both of them involve the impossible idea of knowing how long a task is going to take.

Moreover, they do not maintain fairness with regards to the amount of time spent using a resource. In fact, SJF and SRTF can lead to starvation which is the continued lack of progress for one task due to other tasks being scheduled over it. If small tasks keep arriving, larger tasks will never get to run.

Round Robin (RR)

Round robin (RR) schedules tasks such that each of them takes turns using a resource for a small unit of time known as the time quantum \((q)\). After \(q\) expires, the task is preempted and added to the end of the ready queue. When \(q\) is large, RR resembles FCFS, becoming identical if \(q\) is larger than the length of any task. When \(q\) is small, RR resembles SJF Generally, \(q\) must be large with respect to the cost of context switching to avoid the overhead being too high.

If there are n tasks, each task gets \(1/n\) amount of resources, ensuring fairness in terms of sharing resources. No task will wait for more than \((n - 1)q\) time units.

In general, with RR, small scheduling quantum decreases response time but increases completion time, due to the extra context switching overhead and the fact that longer jobs get “stretched out”.

Lottery

Lottery gives each task a certain number of lottery tickets. On each time slice, a ticket is randomly picked. On expectation, each task will be given time proportional to the number tickets it was originally given. When distributing tickets, it’s important to make sure that every task gets at least one ticket to avoid starvation. If SRTF was to be approximated, shorter tasks would simply get more tickets than longer tasks.

Multi-Level Feedback Queue (MLFQ)

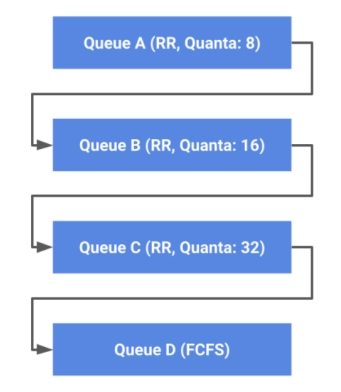

Multi-level feedback queue (MLFQ) uses multiple queues which each have different priority. Each queue has its own scheduling algorithm. Higher-priority queues (foreground) will typically use RR while lower-priority queues (background) might use FCFS.

A task will start at the highest-priority queue. If the task uses up all the resources (e.g. \(q\) for RR), it will get pushed one level down as a penalty. If the task does not use up all the resources (e.g. less than \(q\) for RR), it will get pushed one level up as a reward. This ensures that long running tasks (e.g. CPU bound) don’t hog all resources and get demoted into low priority queues while short running tasks (e.g. I/O bound) will remain at the higher-priority queues.

Round Robin

Please answer the following question with True/False. Explain your answer.

The average wait time is less than that of FCFS for the same workload.

Solution

False. This is generally not true when the time quantum is small. Consider tasks A, B, and C (arriving in this order) which each take 3 time units. With FCFS, the average wait time is 3. With RR (\(q = 1\)), average wait time is 5.

It requires preemption to maintain uniform quanta.

Solution

True. Without preemption, a task would just run forever without adhering to the quantum.

If a quantum is constantly updated to become the number of cpu ticks since boot,Round Robin becomes FCFS.

Solution

True. Quantum never gets used for any task since it always increases as the task progresses.

When using RR, cache performance is likely to improve relative to FCFS.

Solution

False. RR usually results in more context switches when compared to FCFS, meaning the cache will have more misses.

If no new threads are entering the system, all threads will get a chance to run in the cpu every

QUANTA * SECONDS_PER_TICK * NUMTHREADSseconds, assuming QUANTA is in ticks.Solution

False. There exists context switching overhead that delays the execution of threads.

Life Ain’t Fair

Suppose the following threads (priorities given in parentheses) arrive in the ready queue at the clock ticks shown. Assume all threads arrive unblocked and that each takes 5 clock ticks to finish executing. Assume threads arrive in the queue at the beginning of the time slices shown and are ready to be scheduled in that same clock tick. This means you update the ready queue with the arrival before you schedule/execute that clock tick. Assume you only have one physical CPU.

0 Taj (prio = 7)

1

2 Kevin (prio = 1)

3 Neil (prio = 3)

4

5 Akshat (prio = 5)

6

7 William (prio = 11)

8

9 Alina (prio = 14)

Determine the order and time allocations of execution for each given scheduler scenario. Write answers in the form of vertical columns with one name per row, each denoting one clock tick of execution. For example, allowing Taj 3 units at first looks like:

0 Taj

1 Taj

2 Taj

It will probably help you to draw a diagram of the ready queue at each tick for this problem.

Round robin with time quantum 3

Solution

From

t=0tot=3,Tajgets to run since there is initially no one else on the run queue. Att=3,Tajgets preempted since the time slice is3.Kevinis selected as the next person to run, andNeilgets added to the run queue just beforeTaj.Kevinis the next person to run fromt=3tot=6. Att=5,Akshatgets added to the run queue, which consists of at this point:Neil,Taj,Akshat. Att=6,Kevingets preempted andNeilgets to run since he is next.Kevingets added to the back of the queue, which now consists of:Taj,Akshat,Kevin.From

t=6tot=9,Neilgets to run and then is preempted.Tajgets to run again fromt=9tot=10, and then finishes executing.Akshatgets to run next and this pattern continues until everyone has completed running.0 Taj 10 Taj 20 Alina 1 Taj 11 Akshat 21 Alina 2 Taj 12 Akshat 22 Neil 3 Kevin 13 Akshat 23 Neil 4 Kevin 14 Kevin 24 Akshat 5 Kevin 15 Kevin 25 Akshat 6 Neil 16 William 26 William 7 Neil 17 William 27 William 8 Neil 18 William 28 Alina 9 Taj 19 Alina 29 Alina

SRTF with preemptions

Solution

Since each thread takes 5 ticks, a thread will never be preempted once begun. As a result, each thread will just execute in order of arrival (i.e. FIFO).

0 Taj 10 Neil 20 William 1 Taj 11 Neil 21 William 2 Taj 12 Neil 22 William 3 Taj 13 Neil 23 William 4 Taj 14 Neil 24 William 5 Kevin 15 Akshat 25 Alina 6 Kevin 16 Akshat 26 Alina 7 Kevin 17 Akshat 27 Alina 8 Kevin 18 Akshat 28 Alina 9 Kevin 19 Akshat 29 Alina

Preemptive priority

Solution

Even though

KevinandNeilarrive whileTajis running,Tajhas higher priority, so it runs to completion. Just asTajfinishes,Akshatarrives with a higher priority thanKevinandNeilwho are waiting, soAkshatruns for two clock ticks untilWilliamarrives with a greater priority. Similarly,Williamruns for two clock ticks untilAlinaarrives with a greater priority.Alinaruns to completion, then the remaining threads each run to completion based on priority.0 Taj 10 Alina 20 Neil 1 Taj 11 Alina 21 Neil 2 Taj 12 Alina 22 Neil 3 Taj 13 Alina 23 Neil 4 Taj 14 William 24 Neil 5 Akshat 15 William 25 Kevin 6 Akshat 16 William 26 Kevin 7 William 17 Akshat 27 Kevin 8 William 18 Akshat 28 Kevin 9 Alina 19 Akshat 29 Kevin

Bitcoin Mining

You are a Bitcoin miner, and you’ve developed an algorithm that can run on an unsuspecting machine and mine Bitcoin. You now need to write a program that will run your mining algorithm forever. While you want your mining job to be scheduled often, you also don’t want to attract too much suspicion from system users or administrators. Fortunately, you know that the machines you’re targeting use a MLFQS algorithm to schedule jobs, outlined below.

You decide that the best strategy is to guarantee that your mining job will always be placed on Queues B and C.

Assume that the CPU-intensive mining algorithm you’ve developed can be run in 10-tick intervals. Implement your mining program, and explain your design. The only functions you should use are mine (which runs for 10 ticks) and printf. Assume that your job is initially placed on Queue B.

// bitcoin mine.c void mine_forever() { while(1) { _______________ _______________ _______________ } }Solution

The program needs to exceed the Queue B quanta so that the quanta expires and the job is placed on Queue C. Once placed on Queue C, the job needs to voluntarily yield so that it can be placed on Queue B once again. By running the mining algorithm 4 times, the first 2 iterations will cause the Queue B quanta to expire. After the remaining 2 iterations, the job should voluntarily yield (e.g. print to

stdout).Note: Once the job is placed on Queue C, it will still run the 2nd iteration of the mining algorithm for 4 ticks.

// bitcoin mine sol.c void mine_forever() { while(1) { for (int i = 0; i < 4; i++) mine(); printf("Not a Bitcoin miner!!!\n"); } }

Explain why, regardless of how you implement your mining program, your job will never be placed on Queue A twice in a row.

Solution

Since the mining algorithm can only be run in 10 tick intervals, any implementation will always exceed the Queue A quanta before the CPU can be voluntarily yielded. This will cause the job to be placed on Queue B, since the Queue A quanta expired.